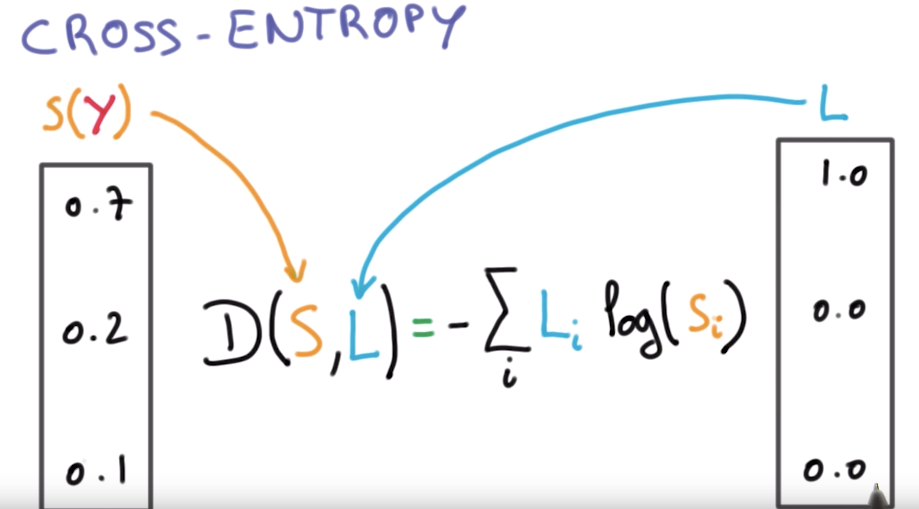

Why do we use the cross entropy cost function for neural networks?

Cross-entropy is a measure from the field of information theory. It builds on entropy and, in general, quantifies the difference between two probability distributions. It can be used as a loss function when optimizing classification models such as logistic regression and artificial neural networks.

How does cross entropy loss work?

Cross-entropy loss is also called logarithmic loss, log loss, or logistic loss. Each predicted class probability is compared with the desired output for that class (0 or 1), and a loss is calculated that penalizes the probability according to how far it is from the expected value.

What is a good cross entropy loss score?

Cross-entropy loss increases as the predicted probability diverges from the actual label. Predicting a probability of 0.012 when the actual observation label is 1 would be bad and would result in a high loss value. A perfect model would have a log loss of 0.

Does Tanh as output activation work with cross entropy loss?

Yes, as long as the outputs are passed through a normalizer (e.g. softmax) so that the final output values lie between 0 and 1 and add up to 1. If you are doing binary classification with a single output value, normalizing that value to lie between 0 and 1 is enough.

What is the best activation function for regression?

The most appropriate activation function for the output neuron(s) of a feedforward neural network used for regression is a linear activation, even if you first normalize your data.

Which type of learning loss is the most common?

Cross-entropy is the most common loss function used in classification problems.
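To make the behaviour of cross-entropy described above more concrete, here is a minimal sketch in plain Python. The helper names `softmax` and `cross_entropy`, the `eps` clipping value, and the example scores are illustrative assumptions and do not come from any particular library.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into probabilities that sum to 1."""
    peak = max(scores)
    # Subtracting the maximum keeps exp() from overflowing; the result is unchanged.
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index, eps=1e-12):
    """Negative log of the probability assigned to the true class.

    eps is an assumed clipping value that avoids log(0).
    """
    p = min(max(probs[true_index], eps), 1.0)
    return -math.log(p)


# Probability 0.012 on the true class gives a high loss (roughly 4.42).
bad_loss = cross_entropy([0.988, 0.012], true_index=1)

# Probability 1.0 on the true class gives a loss of 0.
perfect_loss = cross_entropy([0.0, 1.0], true_index=1)

# tanh outputs lie in [-1, 1], so they are not probabilities on their own.
# Passing them through softmax yields values in (0, 1) that sum to 1.
tanh_outputs = [math.tanh(2.0), math.tanh(-1.0), math.tanh(0.5)]
probs = softmax(tanh_outputs)
tanh_loss = cross_entropy(probs, true_index=0)
```

In practice a deep learning framework would supply both steps. The sketch only shows why the loss grows as the probability on the true class shrinks, and why unnormalized tanh outputs need a normalizer before cross-entropy is applied.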

1 Why do we use the cross entropy cost function for neural networks?
3 What is a good cross entropy loss score?
4 Does Tanh as output activation work with cross entropy loss?
5 What is the best activation function for regression?
6 Which type of learning loss is the most common?
7 What does loss mean in deep learning?
9 What do you mean by model validation?
